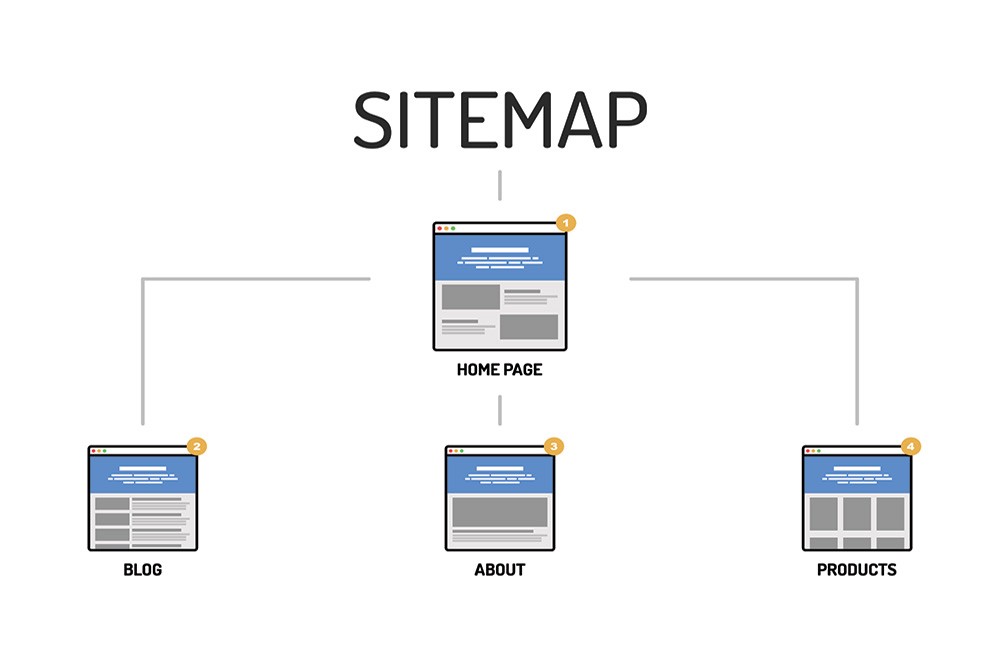

For SEO purposes, the main advantage of a sitemap is that it reduces the number of links a crawler must follow to reach every page on a site.
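To make "number of links that must be followed" concrete, here is a minimal Python sketch that measures click depth (how many links a crawler follows from the home page) with a breadth-first search. The site structures and URLs are hypothetical. The only assumption is that a sitemap page, linked from the home page, lists every page.

```python
from collections import deque


def max_click_depth(links: dict[str, list[str]], start: str) -> int:
    """Breadth-first search from `start`; returns the largest number of
    links a crawler must follow to reach any reachable page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit is the shortest path in BFS
                depth[target] = depth[page] + 1
                queue.append(target)
    return max(depth.values())


# Hypothetical site where each page is only reachable through a chain of links.
site = {
    "/": ["/a"],
    "/a": ["/b"],
    "/b": ["/c"],
    "/c": ["/d"],
}

# The same site, plus a sitemap page linked from the home page that lists every page.
with_sitemap = dict(site)
with_sitemap["/"] = site["/"] + ["/sitemap"]
with_sitemap["/sitemap"] = ["/a", "/b", "/c", "/d"]

print(max_click_depth(site, "/"))          # 4
print(max_click_depth(with_sitemap, "/"))  # 2
```

With the sitemap in place, no page is more than two links from the home page: one hop to the sitemap and one hop to the page itself.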

Sitemaps are very effective at drawing the attention of search engine spiders and crawlers, which can mean much faster indexing and, in turn, faster rankings.

The boost sitemaps provide hasn’t been fully measured, but many SEOs suspect that bots have a built-in preference for spidering sitemaps. A few rules govern how to build and maintain a sitemap for the most benefit:

- Link to your sitemap from every page on the site (this ensures even link distribution and increases how often the sitemap is spidered).

- Don’t put more than 200 links on a sitemap page. Google’s older recommendations page sets this limit at 100, but reports from around the SEO community indicate there is no detriment to having up to 200 links on a page.

- Avoid external links on the sitemap page. For users and spiders alike, the sitemap’s established purpose is to index your website’s own pages, and external links detract from that.
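The last two rules can be applied mechanically when generating an HTML sitemap. The sketch below assumes you already have a list of your site's URLs; it drops external links and duplicates, then splits the remaining URLs into pages of at most 200 links. The function name `build_sitemap_pages` and its `site_host` parameter are hypothetical. Hostnames are compared literally, so `www.example.com` and `example.com` count as different sites.

```python
from urllib.parse import urlparse


def build_sitemap_pages(urls: list[str], site_host: str, max_links: int = 200) -> list[list[str]]:
    """Split a site's own URLs into HTML sitemap pages of at most `max_links` links.

    External links (any host other than `site_host`) and repeated URLs are dropped;
    relative URLs, which have no host, are treated as internal.
    """
    internal: list[str] = []
    seen: set[str] = set()
    for url in urls:
        host = urlparse(url).netloc.lower()
        if host in ("", site_host.lower()) and url not in seen:
            seen.add(url)
            internal.append(url)
    # Consecutive slices of at most max_links URLs, one slice per sitemap page.
    return [internal[i:i + max_links] for i in range(0, len(internal), max_links)]


# Hypothetical input: 450 internal product pages plus one external link.
urls = [f"https://example.com/product-{n}" for n in range(450)]
urls.append("https://partner.example.org/offer")
pages = build_sitemap_pages(urls, "example.com")
print([len(page) for page in pages])  # [200, 200, 50]
```

The 200-link default mirrors the community figure quoted above; pass `max_links=100` to follow Google's older, stricter recommendation instead.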

Which pages should you exclude from your sitemap?

By default, exclude the following types of pages from your sitemap:

- Non-canonical pages

- Duplicate pages

- Paginated pages

- Parameterized URLs

- Site search result pages

- URLs created by filtering options

- Archive pages

- Redirects (3xx), missing pages (4xx), and server error pages (5xx)

- Pages blocked by robots.txt

- Pages with a noindex directive

- Pages accessible only through a lead-gen form (PDFs, etc.)

- Utility pages (login page, wishlist/cart pages, etc.)
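One way to apply this list is to filter crawl data before writing the sitemap. The sketch below assumes a hypothetical `Page` record produced by your own crawler. Most exclusions are flags that the crawler must supply, while a few are inferred heuristically: any status other than 200 OK, a canonical URL that differs from the page's own URL, a query string (which catches many parameterized, filtered, site-search and `?page=N` URLs), and an assumed WordPress-style `/page/N/` pagination path. Robots.txt rules are checked with Python's standard `urllib.robotparser` module.

```python
import re
from dataclasses import dataclass
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


@dataclass
class Page:
    """Hypothetical crawl record; the field names are illustrative."""
    url: str
    status: int              # HTTP status code returned for the URL
    canonical: str           # URL declared in rel="canonical"
    noindex: bool = False    # page carries a noindex directive
    duplicate: bool = False  # flagged as a duplicate by your own checks
    archive: bool = False    # date/category/tag archive page
    gated: bool = False      # only reachable through a lead-gen form
    utility: bool = False    # login, cart, wishlist, etc.


# Assumed WordPress-style pagination path, e.g. /blog/page/2/
PAGINATED_PATH = re.compile(r"/page/\d+/?$")


def should_include(page: Page, robots: RobotFileParser, user_agent: str = "*") -> bool:
    """Return True if the page passes every exclusion rule in the list above."""
    parsed = urlparse(page.url)
    if page.status != 200:                # redirects (3xx), 4xx and 5xx responses
        return False
    if page.canonical != page.url:        # non-canonical page
        return False
    if parsed.query:                      # parameters, filters, site search, ?page=N
        return False
    if PAGINATED_PATH.search(parsed.path):
        return False
    if not robots.can_fetch(user_agent, page.url):  # blocked by robots.txt
        return False
    return not (page.noindex or page.duplicate or page.archive
                or page.gated or page.utility)


# Example: robots.txt rules parsed from a list of lines instead of fetched.
robots = RobotFileParser()
robots.parse(["User-agent: *", "Disallow: /private/"])

pages = [
    Page("https://example.com/blog/post", 200, "https://example.com/blog/post"),
    Page("https://example.com/old-post", 301, "https://example.com/old-post"),
    Page("https://example.com/shoes?color=red", 200, "https://example.com/shoes"),
    Page("https://example.com/private/report", 200, "https://example.com/private/report"),
    Page("https://example.com/cart", 200, "https://example.com/cart", utility=True),
]
sitemap_urls = [p.url for p in pages if should_include(p, robots)]
print(sitemap_urls)  # ['https://example.com/blog/post']
```

The URL heuristics are deliberately conservative: a site that uses query strings for legitimate, canonical pages would need to relax the query-string check.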